Description

DLSS has been a game-changing technology over the past couple of years, and VR technology has made strides of its own. But what if we could take methods from both and make something new?

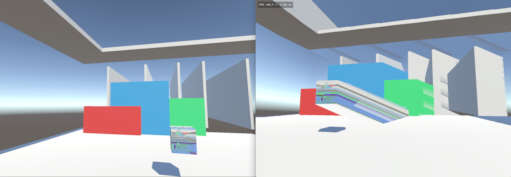

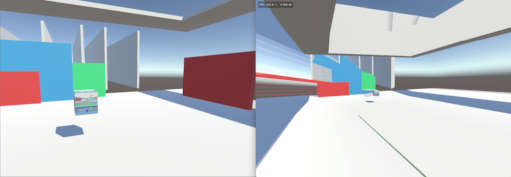

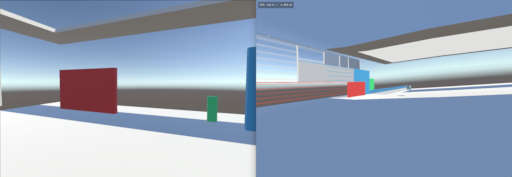

Asynchronous Reprojection combines the time warp concept from VR with DLSS's idea of reconstructing missing data in order to interpolate smooth frame rates from choppy gameplay. Where DLSS frame generation synthesizes new frames with AI, asynchronous reprojection makes new frames by re-using pixel data from old ones.
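To make the "re-using pixel data" idea concrete, here is a minimal per-pixel sketch in Python. It is not the demo's code: the `Camera` class, the yaw-only rotation, the Unity-style left-handed axes (x right, y up, z forward), and the assumption that the depth buffer stores linear view-space depth are all illustrative choices. Each old pixel is lifted to a world-space point using its depth and the old camera, then projected through the new camera to find where it lands on screen.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    # Hypothetical camera description, not the demo's actual transform layout.
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    yaw: float = 0.0                   # radians about the up (y) axis; pitch omitted for brevity
    fov_y: float = math.radians(60.0)  # the demo's default FOV of 60, assumed to be vertical
    width: int = 1280
    height: int = 720

    @property
    def focal(self) -> float:
        # Focal length in pixels of a pinhole camera with vertical FOV fov_y.
        return (self.height / 2) / math.tan(self.fov_y / 2)


def unproject(px, py, depth, cam):
    """Pixel plus linear view depth -> world-space point."""
    f = cam.focal
    vx = (px - cam.width / 2) / f * depth
    vy = -(py - cam.height / 2) / f * depth  # screen y points down, view y points up
    vz = depth
    c, s = math.cos(cam.yaw), math.sin(cam.yaw)
    # Rotate the view-space point by the camera's yaw, then translate.
    return (cam.x + c * vx + s * vz, cam.y + vy, cam.z - s * vx + c * vz)


def project(world, cam):
    """World-space point -> pixel, or None if the point is behind the camera."""
    dx, dy, dz = world[0] - cam.x, world[1] - cam.y, world[2] - cam.z
    c, s = math.cos(cam.yaw), math.sin(cam.yaw)
    # Inverse (transposed) yaw rotation brings the point into view space.
    vx = c * dx - s * dz
    vy = dy
    vz = s * dx + c * dz
    if vz <= 0:
        return None
    f = cam.focal
    return (cam.width / 2 + vx * f / vz, cam.height / 2 - vy * f / vz)


def reproject(px, py, depth, old_cam, new_cam):
    """Where does a pixel rendered by old_cam appear when viewed from new_cam?"""
    return project(unproject(px, py, depth, old_cam), new_cam)
```

Calling `reproject(640, 360, 5.0, Camera(), Camera(x=1.0))` moves the center pixel to the left, as expected when the camera steps to the right. Pixels of the new frame that no old pixel maps onto are the holes that still need fresh data from the game.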

Controls

- W/A/S/D — Move Camera

- Space — Move Camera Up

- Ctrl — Move Camera Down

- Mouse — Yaw/Pitch Camera

- Grave/Tilde (`/~) — Toggle Client Sync

- -/+ — Increase/Decrease FOV (Default 60)

- Num 1–5 — Change Game Target FPS

- The client (the window titled "CS355 Multi-Threaded Spacial Partitioning Demo"; the framework is re-used from the spatial partitioning assignment, and yes, the title is misspelled) has full control over the application. The Unity window is a dummy window that just runs the "game". Closing either window closes both.

- The only data transferred between the game and the client is a framebuffer containing the color and depth data, with the camera's transform encoded in the same buffer.

- Data transfer is handled through shared application memory using a memory-mapped file. This introduces latency because every frame has to be handed off between the GPU and the CPU, so shared graphics memory would be a more practical method.
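The memory-mapped handoff described above can be sketched with Python's standard `mmap` and `struct` modules. The byte layout here is purely hypothetical (a header with width, height, camera position, and a rotation quaternion, followed by RGBA8 color and float32 depth); the demo's real layout is not documented on this page. Both "sides" run in one process for brevity, and no synchronization is shown, which a real game/client pair would need.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical header: width, height, position xyz, rotation quaternion xyzw.
HEADER = struct.Struct("<II7f")


def frame_size(width, height):
    # Header + RGBA8 color (4 bytes/pixel) + float32 depth (4 bytes/pixel).
    return HEADER.size + width * height * 4 + width * height * 4


def write_frame(buf, width, height, position, rotation, color, depth):
    """Game side: pack the camera transform, color, and depth into the buffer."""
    HEADER.pack_into(buf, 0, width, height, *position, *rotation)
    start = HEADER.size
    buf[start:start + len(color)] = color
    struct.pack_into(f"<{width * height}f", buf, start + len(color), *depth)


def read_frame(buf):
    """Client side: unpack everything written by write_frame."""
    width, height, *xform = HEADER.unpack_from(buf, 0)
    count = width * height
    start = HEADER.size
    color = bytes(buf[start:start + count * 4])
    depth = struct.unpack_from(f"<{count}f", buf, start + count * 4)
    return width, height, tuple(xform[:3]), tuple(xform[3:]), color, depth


def demo():
    width, height = 4, 2
    path = os.path.join(tempfile.mkdtemp(), "frame.bin")
    with open(path, "wb") as f:
        f.truncate(frame_size(width, height))  # reserve space for one frame
    with open(path, "r+b") as game_file, open(path, "r+b") as client_file:
        game = mmap.mmap(game_file.fileno(), 0)      # writer mapping
        client = mmap.mmap(client_file.fileno(), 0)  # independent reader mapping
        color = bytes([255, 0, 0, 255]) * (width * height)  # solid red
        depth = [0.5] * (width * height)
        write_frame(game, width, height, (1.0, 2.0, 3.0), (0.0, 0.0, 0.0, 1.0), color, depth)
        result = read_frame(client)
        game.close()
        client.close()
    return result
```

Keeping the camera transform in the same buffer as the pixels means the client always knows which pose a given frame was rendered from, which is exactly what reprojection needs.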

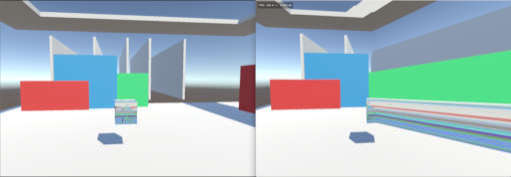

- To get an idea of the usefulness, cap the game frame rate at something low (Num 4/5) and make minor adjustments to the camera. Notice how most of the center of the view updates smoothly, since reprojection re-uses those pixels instead of waiting for them to be re-rendered.
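As a rough illustration of why a capped game frame rate leans so heavily on reprojection, the sketch below counts how many client refreshes receive a new game frame and how many must re-use an older one. The rates are hypothetical (this page does not list the values behind Num 1–5 or the client's refresh rate), and the model assumes game frames arrive instantly at perfectly even intervals.

```python
def count_frames(game_fps, display_fps, seconds=1):
    """Return (fresh, reused) client ticks over the given number of seconds."""
    fresh = reused = 0
    last_index = -1
    for tick in range(display_fps * seconds):
        # Index of the newest game frame available at this client tick
        # (integer math avoids floating-point rounding at frame boundaries).
        index = (tick * game_fps) // display_fps
        if index != last_index:
            fresh += 1       # a new game frame arrived since the last tick
            last_index = index
        else:
            reused += 1      # no new frame: reproject the old one again
    return fresh, reused
```

With a hypothetical 15 FPS game and a 60 Hz client, `count_frames(15, 60)` gives 15 fresh ticks and 45 reused ones, so three out of every four displayed frames come purely from reprojected pixels.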

Gallery

File Downloads

Demo – AsynchronousReprojection.zip